AI-Native Prevention for Tomorrow's Threats

Powered by 30+ years of human expertise, 11 Research and Development Centers around the globe and local customer care.

SAVE 15%

Renew, enlarge, upgrade and more

99.9%

Eliminate risks

99.9% malware protection rate against infection by malicious files. Minimize the risk of data breaches and cyberattacks.

100%

Software integrity

Resistance against most prevalent attacks.

5X

Lighter on system

With a lighter system footprint, your employees can use their PCs more smoothly while carrying out their daily tasks.

Stefan Pistorius

EDP and Administration Manager Pharmaceuticals

Antivirus, worry-free payments, and online privacy protection including anti-phishing and Wi-Fi protection.

For online purchase, please choose correct number of devices

Elevate your protection with a password manager, sensitive file encryption, and cutting-edge threat detection.

Get the best security plan available, complete with VPN and Identity protection for total peace of mind.

Modern multilayered endpoint protection featuring strong machine learning and easy-to-use management

Vulnerability & Patch Management

Actively track & fix vulnerabilities in operating systems and applications across all endpoints.

Available as an on-demand upgrade that provides an additional protection layer. Click the plus sign and contact a salesperson to receive an offer tailored to your individual needs. No commitment.

Mobile Threat Defense

Robust security for all Android and iOS mobile devices within the organization. Equip your mobile fleet with Antimalware, Anti-Theft and MDM capabilities.

Mail Server Security

An additional layer of security, protecting Exchange and IBM email servers from threats entering the network on top of the standard endpoint and file server protection.

Features advanced anti-phishing, anti-malware, and anti-spam combined with cloud-powered proactive threat defense. Provides you with robust quarantine management and rule definition/filtering system.

Prevents ransomware and other email-borne attacks without compromising email's speed.

Cloud App Protection

Advanced protection for Microsoft 365 and Google Workspace apps, with additional proactive threat defense.

Multi-Factor Authentication

Single-tap, mobile-based multi-factor authentication that protects organizations from weak passwords and unauthorized access.

Premium Support Essential

Prioritized, accelerated and guaranteed support from experts.

Best-in-class endpoint protection against ransomware & zero-day threats, backed by powerful data security

Vulnerability & Patch Management

Actively track & fix vulnerabilities in operating systems and applications across all endpoints.

Cloud App Protection

Advanced protection for Microsoft 365 and Google Workspace apps, with additional proactive threat defense.

Mail Server Security

An additional layer of security, protecting Exchange and IBM email servers from threats entering the network on top of the standard endpoint and file server protection.

Features advanced anti-phishing, anti-malware, and anti-spam combined with cloud-powered proactive threat defense. Provides you with robust quarantine management and rule definition/filtering system.

Prevents ransomware and other email-borne attacks without compromising email's speed.

Unlock a higher protection tier with the added advantage of EDR included. Click on the plus sign and reach out to our dedicated sales team to explore a tailored offering that meets your unique requirements. No commitment.

Starting at 25 devices.

Extended Detection and Response

Additional platform capability to proactively detect threats, effectively identify anomalous behavior in the network and realize timely remediation, preventing breaches and business disruption.

ESET Inspect, the XDR-enabling cloud-based tool, provides outstanding threat and system visibility, allowing risk managers and security professionals to perform fast and in-depth root cause analysis and immediately respond to incidents.

MDR Service

24/7 managed detection and response service combining AI and human expertise to achieve unmatched threat detection and rapid incident response.

Multi-Factor Authentication

Single-tap, mobile-based multi-factor authentication that protects organizations from weak passwords and unauthorized access.

Premium Support Essential

Prioritized, accelerated and guaranteed support from experts.

Complete, multilayered protection for endpoints, cloud applications and email, the number one threat vector

Multi-Factor Authentication

Single-tap, mobile-based multi-factor authentication that protects organizations from weak passwords and unauthorized access.

Premium Support Essential

Prioritized, accelerated and guaranteed support from experts.

The price displayed is valid for the first term only. If a savings amount is shown, it is the difference between the first term’s price and the official list price. Prices are subject to change, but we will always send you a notification email in advance.

ESET researchers are among the most active contributors to MITRE ATT&CK®, a global knowledge base of adversary tactics. They have discovered previously unknown cyberespionage groups like Sandworm, MoustachedBouncer, Turla and more.

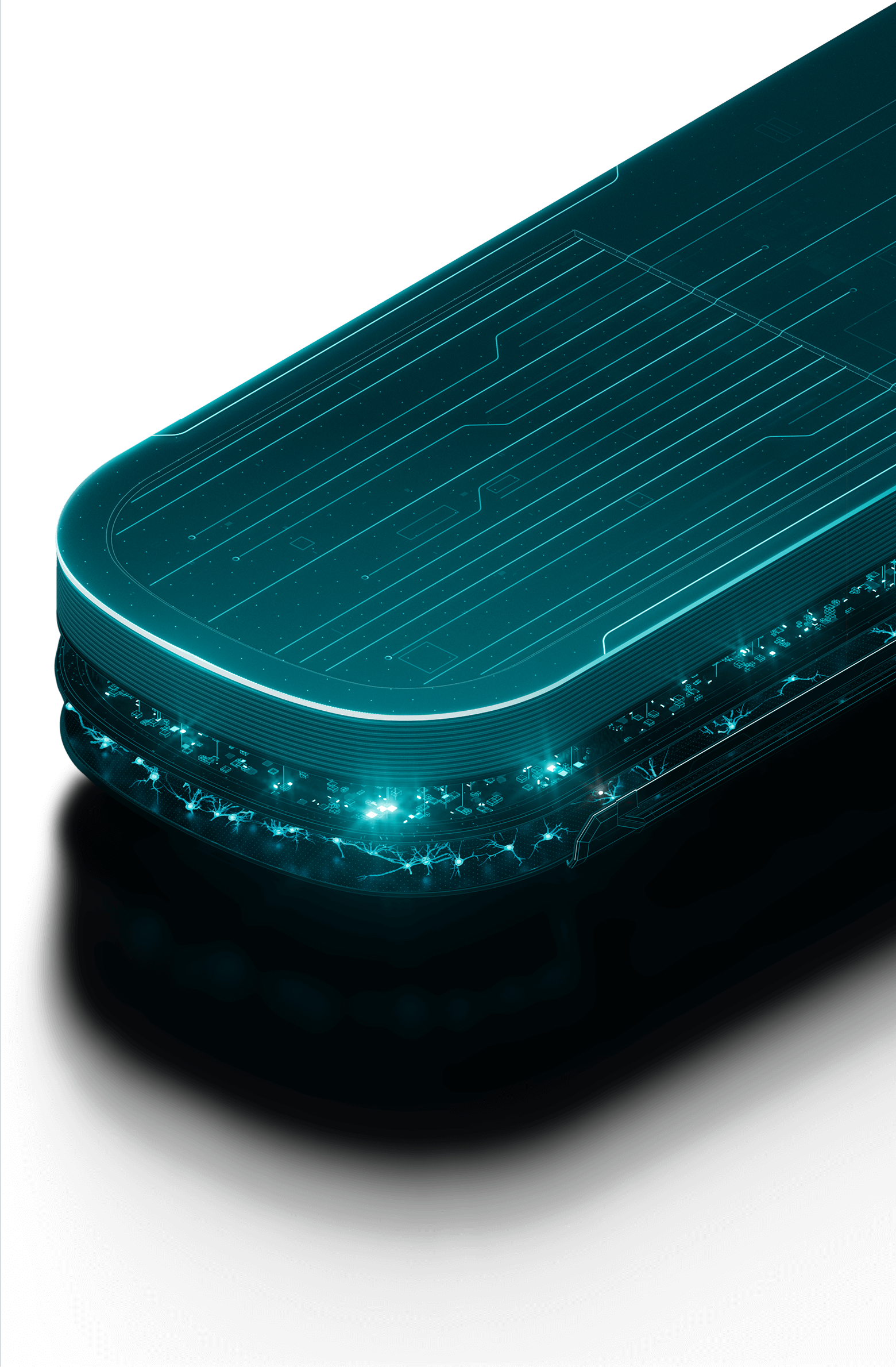

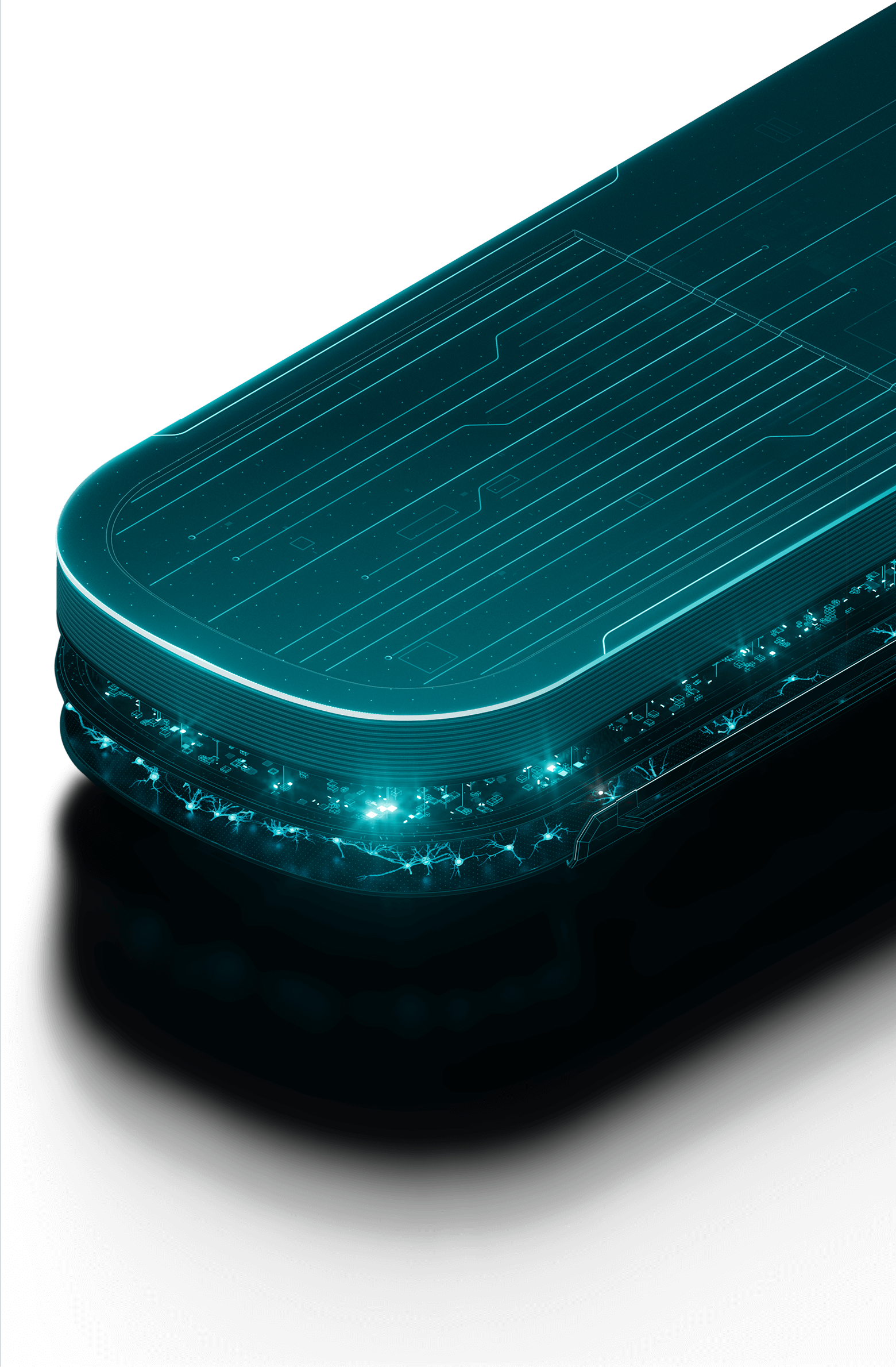

Continuous innovation has allowed ESET to develop a multitude of unique, proprietary, cloud-powered, and multi-layered protection technologies that work together as ESET LiveSense.

ESET, a global leader in cybersecurity, is proud to announce that the company has won a 2023 SC Award in the Excellence Award category for Best Customer Service.

MULTI-LAYERED PROTECTION

16 layers safeguard 1+ billion internet users worldwide

LIGHT ON RESOURCES

Top performer for 11 years in AV-Comparatives testing

EASY TO USE

Verified by consumer reviews on Trustpilot

AWARD-WINNING

100+ consecutive VB100 awards

SUPPORT IN YOUR LANGUAGE

700+ customer care experts

SOCIALLY RESPONSIBLE

Benefiting society for more than 30 years

ESET RESPONDS TO THE UKRAINIAN CRISIS AND RELATED CYBERATTACKS.

We are offering a helping hand on several fronts.

Find everything you need at our customer portal

Update your subscription and eStore account information.

Renew, upgrade or add devices to your ESET license.